-

Get Cloud GPU Server - Register Now!

Toggle navigation

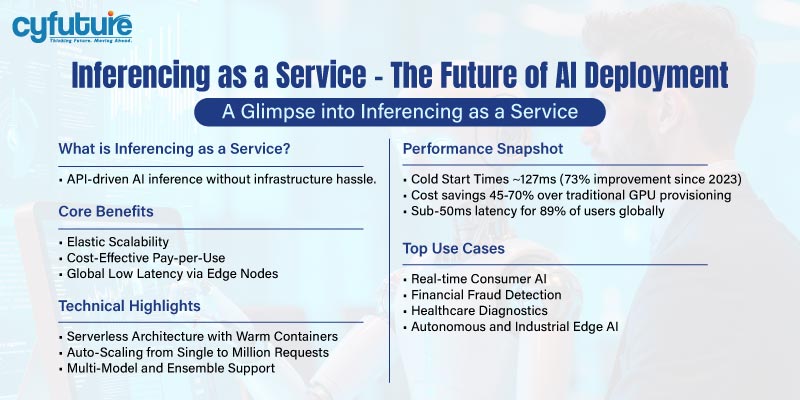

In the rapidly evolving landscape of artificial intelligence, the ability to deploy and scale AI models efficiently has become paramount. Today’s tech leaders, enterprises, and developers face the challenge of balancing performance, cost, and operational complexity as they strive to integrate AI-driven insights into their products and workflows. Inferencing as a Service (IaaS) emerges as a game-changing cloud paradigm that abstracts the intricacies of infrastructure, enabling faster, scalable, and cost-effective AI inference deployment.

Inferencing as a Service provides developers and enterprises with cloud-based platforms to run AI model inference without the traditional hassle of managing dedicated infrastructure such as GPUs, servers, or orchestration systems. In this model, pre-trained AI models are deployed to managed platforms accessible through API endpoints, which automatically handle compute provisioning, scaling, and workload optimization dynamically.

This approach contrasts sharply with classical on-prem or cloud VM-based GPU provisioning, which often requires significant upfront investment, complex setup, and manual capacity management. Inferencing as a Service offers a pay-per-use, serverless, and containerized execution environment that dynamically adjusts resources in response to real-time traffic, eliminating idle compute time and reducing operational costs drastically.

These facts illustrate a seismic shift: enterprises increasingly demand infrastructure that simplifies AI deployment while scaling elastically under unpredictable workloads.

Instead of dedicating fixed GPU cores or servers, the architecture employs event-driven, serverless platforms. When an inference request is received, the service instantly provisions necessary resources, loads the model from optimized storage or warm containers, processes the request, and delivers results — all without any manual server management.

Complex inference pipelines are decomposed into microservices — such as preprocessing, model execution, and post-processing — each independently scalable. This modularity enables granular optimization and faster response times.

Sophisticated algorithms predict and prepare for demand spikes by pre-provisioning resources seconds ahead, eliminating cold starts and ensuring high availability. Load balancers distribute incoming requests effectively across available compute, scaling from single requests to millions concurrently with linear performance scaling.

The service continuously optimizes between CPU and GPU instances depending on model size and application latency requirements. Advanced runtime techniques like dynamic batching, precision scaling (FP32, FP16, INT8), and model quantization maximize throughput while minimizing costs and latency.

Modern platforms support deploying multiple models concurrently, enabling ensemble inference and cascading pipelines where simpler models filter inputs for more complex downstream models, optimizing resource utilization.

Integration with edge compute nodes worldwide reduces round-trip latency for real-time AI applications, such as autonomous vehicles, IoT, and mobile AI, reaching sub-50ms response times for 89% of global users.

Inferencing as a Service represents the next evolution in AI deployment, marrying the power of cloud-native serverless architecture with dynamic orchestration and intelligent scaling. For enterprises and developers, the model translates into faster time-to-market, superior performance, and significantly reduced total cost of ownership. As AI workloads become more critical and complex, adopting a scalable, flexible, and secure inferencing platform like Cyfuture AI’s GPU-enabled infrastructure empowers innovation at unmatched scale.

This shift is pivotal for businesses aiming to integrate AI-driven capabilities seamlessly into their products and operations, without getting bogged down in infrastructure complexities. The future of AI deployment is on-demand, serverless, and efficient — precisely what Inferencing as a Service delivers.

If you want, I can assist with a more detailed deep dive into Cyfuture’s specific Inferencing as a Service offerings or help create tailored technical guides for your teams.