
Artificial intelligence remains one of the most elusive subjects in computer science, even though it has been studied for over half a century. During this period, AI has attracted the attention of many, be it academics, scientists, filmmakers or artists.

So, how did it all begin? Which events have shaped the development of AI since its inception?

Let us take a look at the history of artificial intelligence, covering all the key milestones from the 1940s to the present.

Figure 1: In his story Runaround, later collected in the book I, Robot, Isaac Asimov listed the three laws of robotics.

In 1942, the renowned science fiction writer Isaac Asimov published a short story, Runaround, later collected in his book I, Robot, that featured a robot called Speedy. In this story, Asimov listed the three laws of robotics:

First Law: A robot may not injure a human being or, through inaction, allow a human being to come to harm.

Second Law: A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

Third Law: A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.

In Runaround, the robot Speedy finds itself in a situation where the Second and Third Laws pull against each other, leaving it unable to act. The story gained Asimov some science-fiction fans; it also made scientists think about the possibility of machines with intelligence. Even today, the laws of robotics are often cited in discussions about how modern AI should behave.

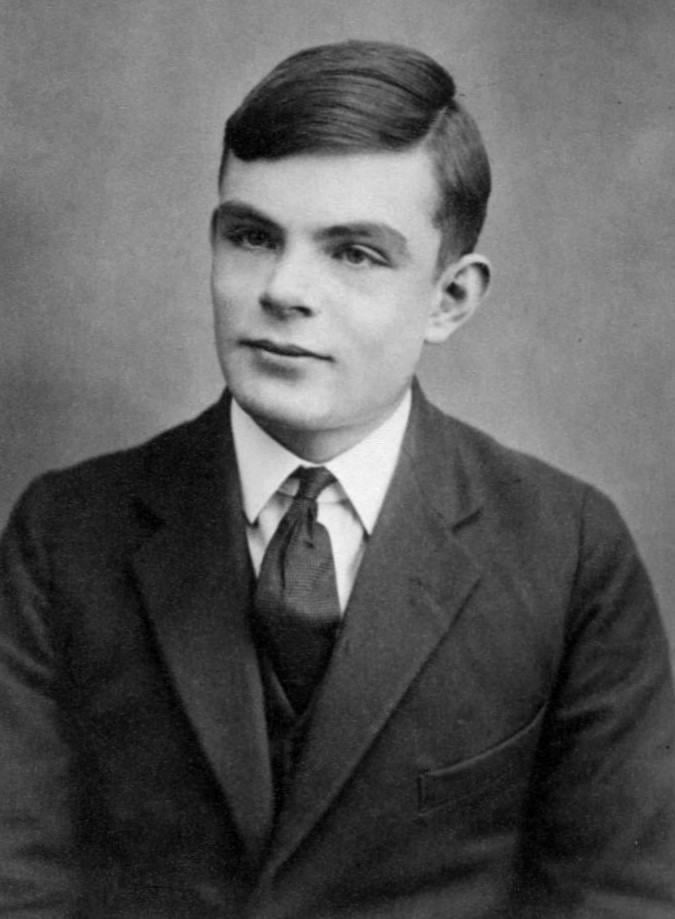

Figure 2: Alan Turing proposed a test for evaluating if machines could think.

Have you watched the 2014 film The Imitation Game, starring Benedict Cumberbatch? It is inspired by the life of the renowned British mathematician and computer scientist Alan Turing. Turing’s pathbreaking work laid the foundation for the development of AI.

In 1950, Turing published a paper entitled ‘Computing Machinery and Intelligence’.

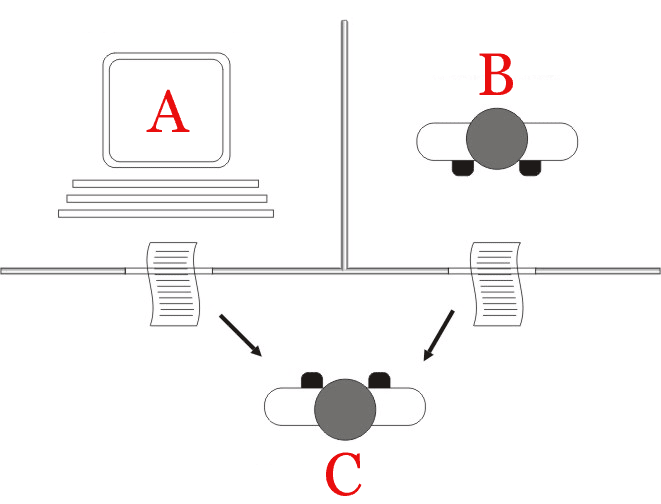

In this paper, he posed a question: ‘Can machines think?’ Turing also proposed a test for evaluating whether machines can think. This test, which he called the ‘Imitation Game’, later came to be known as the ‘Turing Test’.

In this test, an interrogator was challenged to differentiate between the text-only responses of a machine and a human being. Although no machine could pass the Turing test at that time, the test offered a benchmark for identifying intelligence in a machine.

Figure 3: In the Turing Test, the interrogator, player C, is asked to determine which player (A or B) is a human and which is a computer.

Figure 4: The Dartmouth Conference held in 1956 is often regarded as the birthplace of AI

By the mid-1950s, computer scientists had begun working on areas such as neural networks and natural language, but there was no unifying concept that covered different kinds of machine intelligence.

In 1956, John McCarthy, a mathematics professor at Dartmouth College, and others organised an AI conference at the college. The event, held in the summer of 1956, brought together some of the brightest minds in computer and cognitive science, who discussed the various fields where AI could potentially be applied: reasoning, learning and search, language and cognition, gaming, and human interactions with intelligent machines like personal robots.

Interestingly, it was for the Dartmouth conference that McCarthy coined and defined the term ‘Artificial Intelligence’.

The conference is often regarded as the birthplace of AI: it organized and energized AI as a field of research.

Figure 5: In 1957, Frank Rosenblatt built the Mark I Perceptron at the Cornell Aeronautical Laboratory.

In 1957, a young research psychologist named Frank Rosenblatt built the Mark I Perceptron at the Cornell Aeronautical Laboratory. The device was an analog neural network modelled on the way biological neurons work, and it demonstrated the ability to learn.

Rosenblatt’s Perceptron was simulated on an IBM 704 computer at the laboratory. The computer was fed a series of punch cards. After about 50 trials, it taught itself to distinguish cards marked on the left from cards marked on the right. As the Perceptron could learn new skills through trial and error, it was considered a quantum leap in the area of intelligent machines and laid the foundation for modern-day neural networks.
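To make the idea of learning by trial and error concrete, here is a minimal sketch of the perceptron learning rule in Python. It is not Rosenblatt’s original program or hardware: the four-cell card encoding, the function names `predict` and `train_perceptron`, and the learning rate are all assumptions made for illustration. Each card is a list of four cells, where 1 means the cell is marked, and the label is 0 for a left-marked card and 1 for a right-marked card.

```python
def predict(weights, bias, card):
    """Fire (return 1) if the weighted sum of marked cells exceeds zero."""
    activation = bias + sum(w * x for w, x in zip(weights, card))
    return 1 if activation > 0 else 0


def train_perceptron(cards, labels, epochs=50, lr=1.0):
    """Adjust the weights only when a guess is wrong (trial and error)."""
    weights = [0.0] * len(cards[0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for card, label in zip(cards, labels):
            error = label - predict(weights, bias, card)
            if error != 0:
                mistakes += 1
                # Nudge each weight towards the correct answer.
                weights = [w + lr * error * x for w, x in zip(weights, card)]
                bias += lr * error
        if mistakes == 0:  # every card classified correctly, stop early
            break
    return weights, bias


# Hypothetical punch cards: the first two cells are the left half,
# the last two cells are the right half.
CARDS = [
    [1, 0, 0, 0], [1, 1, 0, 0], [0, 1, 0, 0],  # marked on the left
    [0, 0, 1, 0], [0, 0, 1, 1], [0, 0, 0, 1],  # marked on the right
]
LABELS = [0, 0, 0, 1, 1, 1]  # 0 = left, 1 = right

weights, bias = train_perceptron(CARDS, LABELS)
predictions = [predict(weights, bias, card) for card in CARDS]
print(predictions)
```

Because the two kinds of card can be separated by a straight line, the loop stops changing the weights after a handful of passes, and the learned weights end up negative for the left cells and positive for the right cells.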


After the Dartmouth conference, key breakthroughs began happening in the area of AI. For this reason, the period from 1956 to 1973 is often called the first summer of AI.

These are some milestone events that took place during this period:

1958: John McCarthy developed a high-level programming language, Lisp, while working at the Massachusetts Institute of Technology (MIT). It went on to become a popular programming language for AI research.

1961: Unimate, the first industrial robot, was deployed on a General Motors assembly line. The robot was used to automate metalworking and welding processes.

1964: Daniel Gureasko Bobrow, an American computer scientist, created an AI program called STUDENT. Written in Lisp, the program was designed to read and solve word problems found in high school algebra books.

1966: Joseph Weizenbaum, a German-American computer scientist at the Massachusetts Institute of Technology, created a natural language processing program, ELIZA. The program was designed to imitate a therapist, asking its users open-ended questions and responding with follow-ups. ELIZA is now regarded as the first chatbot in the history of artificial intelligence.


1966: A group of engineers at the Stanford Research Institute created Shakey, the first general-purpose mobile robot with the ability to reason about its surroundings.

1973: WABOT-1, the first full-scale anthropomorphic robot, was created in Japan. The robot had a limb-control system, a vision system and a conversation system. It could converse with a person in Japanese.

Throughout the 1960s, government agencies such as the U.S. Defense Advanced Research Projects Agency (DARPA) granted large funds for AI research and asked little about the results. During this period, many AI researchers exaggerated the potential of their work because they did not want to lose that funding.

However, around the late 1960s and early ’70s, things began to change. Two reports in particular returned a pessimistic forecast on the potential of the technology: the ALPAC report for the US government (1966), which assessed research in machine translation, and the Lighthill report for the British government (1973), which assessed AI research more broadly.

As a result, both the US and the British governments began to cut funding for university AI research. DARPA, which had funded numerous research projects during the 1960s, now demanded clear timelines and detailed descriptions of the deliverables for each proposal. These events stalled developments in AI and brought on the first winter in the history of AI, which lasted until the ’80s.

The early 1980s witnessed the development of ‘expert systems’ that stored large amounts of domain knowledge and were able to imitate the human decision-making process. The technology, created at Carnegie Mellon University for Digital Equipment Corporation, was rapidly adopted by corporations.

But these expert systems required specialized hardware, so when major equipment manufacturers pulled out of the market in the late ’80s, the market for expert systems began to decline and eventually collapsed in 1987.

These events plunged the AI industry into another ‘winter’. DARPA, which had increased funding following the success of expert systems, cut off much of its funding except for a few hand-picked projects.

Even the term ‘Artificial Intelligence’ became taboo among researchers. Most of them began using different terms for AI-based work: ‘machine learning’, ‘analytics’ and ‘informatics’.

The second winter in the history of AI lasted well into the mid-1990s.

Figure 6: In 1997, Deep Blue Chess Computer defeated Garry Kasparov in chess.

In 1997, the public perception of AI was boosted when IBM’s Deep Blue Chess Computer defeated the reigning world champion, Garry Kasparov, in chess. The match was played live in front of an audience. There were six games, out of which Deep Blue won two, Kasparov won one and three ended in a draw. Interestingly, Kasparov had defeated an earlier version of Deep Blue the year before.

Deep Blue used a ‘brute force’ method during the game. While a human player might weigh up about 50 possible moves, Deep Blue could evaluate about 200 million positions per second. The computer was not a neural network: it relied on hand-crafted search algorithms and did not learn as it played. It did, however, bring AI back into the public eye. It also impressed investors: the value of IBM shares touched an all-time high.
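The ‘brute force’ idea can be illustrated with a short Python sketch that searches every possible line of play in a toy game. This is not Deep Blue’s actual algorithm, which added depth limits, a hand-tuned evaluation function and custom hardware on top of game-tree search. The game here is an assumption chosen to keep the search tiny: two players take turns removing one, two or three stones from a pile, and whoever takes the last stone wins. The function names `best_outcome` and `best_move` are hypothetical.

```python
MOVES = (1, 2, 3)  # a player may remove 1, 2 or 3 stones per turn


def best_outcome(stones):
    """Return +1 if the player to move can force a win, otherwise -1."""
    if stones == 0:
        # The previous player took the last stone, so the mover has lost.
        return -1
    # Try every legal move; the opponent's best result is our worst.
    return max(-best_outcome(stones - m) for m in MOVES if m <= stones)


def best_move(stones):
    """Pick the move that leads to the best outcome after exhaustive search."""
    legal = [m for m in MOVES if m <= stones]
    return max(legal, key=lambda m: -best_outcome(stones - m))


print(best_move(7))      # how many stones to take from a pile of 7
print(best_outcome(8))   # can the player to move win from a pile of 8?
```

The program never learns anything: it simply explores every branch each time it is asked. That is feasible for a pile of stones, but chess has far too many positions to search exhaustively, which is why a real chess engine has to cut the search off and estimate how good a position is.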

Figure 7: Jeff Dean and Andrew Ng’s experiment marked the beginning of the Google Brain Project.

Around 2011, the industry witnessed computer scientists across the world creating artificial neural networks. The same year Google engineer Jeff Dean and Stanford professor Andrew Ng set out to create a large neural network backed by Google’s servers.

The neural network used the computing power of around 16,000 processor cores. Dean and Ng fed the network 10 million random frames taken from YouTube videos. They didn’t tell the network what to look for. When neural networks run in an unsupervised manner, they try to find patterns within the data and group similar inputs on their own.

The data processing went on for 3 days, at the end of which the neural network returned an output containing 3 blurry images depicting visual patterns it had detected in the test images: a cat, a human face and a human body. This experiment was a major breakthrough in the field of deep neural networks and unsupervised learning. It also marked the beginning of the Google Brain project.

Interestingly, 2011 was also the year when Apple released Siri, an AI-based virtual assistant for the iOS operating system. Siri used a natural-language user interface to answer questions and make recommendations to human users.

In 2012, University of Toronto professor Geoffrey Hinton, along with two of his students, created a neural network model, AlexNet, to compete in an image recognition competition called ImageNet. The contenders had to use their systems to process millions of images and identify them with the highest possible accuracy. AlexNet won the competition. It achieved a top-5 error rate of just 15.3%, more than 10 percentage points lower than that of the runner-up.

AlexNet’s win made a strong case that deep neural networks were far superior to other systems in accurately identifying and classifying images. The event triggered a series of developments in deep neural networks and AI, and Hinton received the coveted Turing Award in 2018.

Figure 8: In 2016, AlphaGo defeated the greatest Go player in the world, Lee Sedol.

In 2013, a British company, DeepMind, published a paper showing how neural networks could learn to play classic Atari games, in some cases better than human players. Impressed with the findings, Google acquired the company in 2014. A few years later, the scientists at DeepMind developed a neural network model, AlphaGo, that was designed to play the ancient board game Go. The program played thousands of games against other AlphaGo versions and learnt in the process.

In 2016, AlphaGo defeated the greatest Go player in the world, Lee Sedol, with a score of 4:1.

In 2017, the researchers at the Facebook Artificial Intelligence Research lab (FAIR) made an unexpected discovery while trying to study chatbots.

These researchers had allowed the chatbots, or ‘dialog agents’, to converse freely as they felt it would strengthen their communication skills. But after some time, the chatbots began deviating from the script and started communicating in a new language, one they had created without human intervention. Here’s what the conversation between them looked like:

Bob: i can i i everything else . . . . . . . . . . . . . .

Alice: balls have zero to me to me to me to me to me to me to me to me to

Bob: you i everything else . . . . . . . . . . . . . .

Alice: balls have a ball to me to me to me to me to me to me to me

Bob: i i can i i i everything else . . . . . . . . . . . . . .

Alice: balls have a ball to me to me to me to me to me to me to me

Bob: i . . . . . . . . . . . . . . . . . . .

Alice: balls have zero to me to me to me to me to me to me to me to me to

Bob: you i i i i i everything else . . . . . . . . . . . . . .

Alice: balls have 0 to me to me to me to me to me to me to me to me to

Bob: you i i i everything else . . . . . . . . . . . . . .

Alice: balls have zero to me to me to me to me to me to me to me to me to

Eventually, Facebook had to shut down this AI experiment as it wasn’t going in the intended direction. The fiasco demonstrated the potential of the technology: what AI could do if left unchecked.

In January 2018, two AI programs created by Alibaba and Microsoft beat humans in a Stanford University reading comprehension test.

The test, known as the Stanford Question Answering Dataset, asked contestants to provide answers to more than 100,000 questions taken from over 500 Wikipedia articles.

While the program created by Alibaba scored 82.44 out of 100, the one built by Microsoft scored 82.605. In contrast, the best score of a person was 82.304.

Today in 2020, organisations in all verticals are embracing and experimenting with AI. From virtual assistants and humanoid robots to self-driving cars and personalised shopping experiences, AI is slowly and steadily pervading all aspects of our lives. According to a recent study by PwC, the technology could contribute up to $15.7 trillion to the global economy by 2030. Every now and then, innovations in the AI realm make waves across the planet. So, in the next decade and beyond, we will witness AI opening doors to newer possibilities.

Thanks for reading.
