
Is the cloud adequate for our ever-expanding digital universe, or is there something we’re missing? This question preoccupies technologists and enterprises alike as they confront accelerating data growth and rising demand for faster, more effective computing. In the search for answers, a paradigm shift is underway, one that brings the cloud closer to the ground. Welcome to the realm of edge computing, where the line between the virtual cloud and the tangible world around us blurs, presenting unprecedented opportunities and formidable challenges.

For years, centralized cloud platforms have dominated computing. The emergence of edge computing has challenged that dominance by bringing computation closer to where information is created and consumed. But what exactly is edge computing, and how does it change the way we think about computing resources?

Edge computing means placing computational resources close to the data source, often at the network edge, rather than relying solely on distant data centers. This proximity enables real-time processing and analysis, which makes it well suited to applications that need low latency and high bandwidth, such as autonomous vehicles and augmented reality. Edge computing can also strengthen data privacy and security by reducing the need to transfer sensitive data over long distances.

In this article, we explore the world of edge computing: its basics, uses, benefits, and obstacles. Along the way, we shed light on its transformative power and how it may influence the future of cloud infrastructure services.

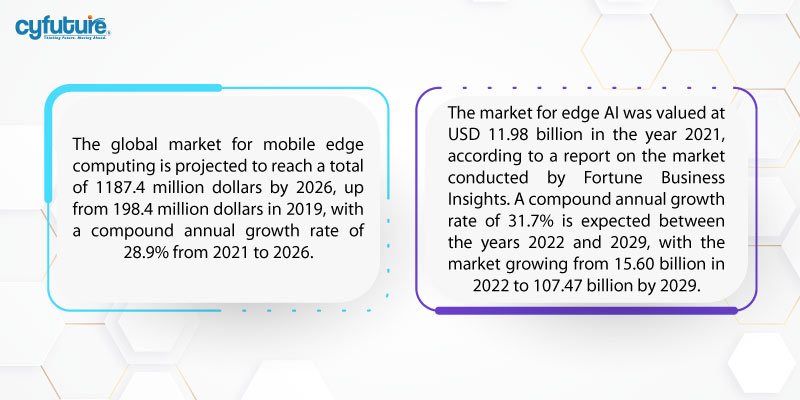

Before delving deeper, let’s look at a few statistics.

Now, let’s unravel the mysteries of edge computing and discover how the cloud connects to the real world.

Edge computing marks a significant shift in IT infrastructure, transforming how data is handled and analyzed. Compared with conventional cloud computing, it places computing resources closer to where data is generated and collected. The point is not just lower latency; it fundamentally changes how we handle information.

Edge computing answers the growing demand for real-time processing and analysis. A network of edge devices and servers is strategically positioned to process data as close to its source as possible. This approach speeds up data processing and analysis, making it ideal for applications that require quick, decisive action.

It is important to acknowledge that edge computing is not a substitute for cloud computing or conventional data centers, but rather an enhancement and extension of the entire IT ecosystem. It combines several cutting-edge technologies, including 5G networks, high-performance computing, artificial intelligence and machine learning, IoT devices, and enhanced edge security, into a powerful computing paradigm.

The introduction of 5G networks has unleashed high-speed, low-latency connectivity, laying the groundwork for edge computing’s real-time capabilities.

Have you heard?

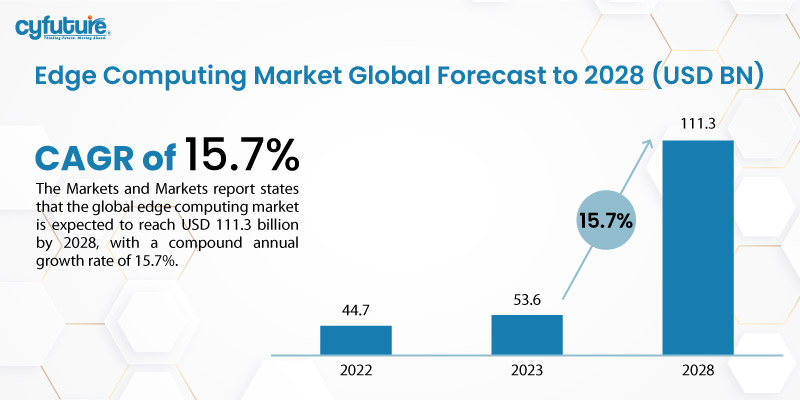

A MarketsandMarkets report projects that the global edge computing market will reach USD 111.3 billion by 2028, growing at a compound annual growth rate (CAGR) of 15.7%.
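As a quick sanity check on growth figures like these, the compound annual growth rate relates a start value and an end value over n years by end = start × (1 + r)^n. The sketch below uses only the two numbers quoted above and assumes a five-year horizon (base year 2023), which is our assumption rather than something stated here, to back out the implied starting market size.

```python
def implied_start_value(end_value: float, cagr: float, years: int) -> float:
    """Invert end = start * (1 + cagr) ** years to recover the start value."""
    return end_value / (1 + cagr) ** years


def project(start_value: float, cagr: float, years: int) -> float:
    """Grow start_value at a constant annual rate for the given number of years."""
    return start_value * (1 + cagr) ** years


END_2028_USD_BN = 111.3  # projected 2028 market size from the report
CAGR = 0.157             # 15.7% per year
YEARS = 5                # assumption: base year 2023, horizon 2028

start = implied_start_value(END_2028_USD_BN, CAGR, YEARS)
print(f"Implied base-year market size: USD {start:.1f} billion")

# Round-trip check: growing the implied start value reproduces the 2028 figure
print(f"Projected 2028 size: USD {project(start, CAGR, YEARS):.1f} billion")
```

Changing `YEARS` shows how sensitive the implied base value is to the assumed horizon.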

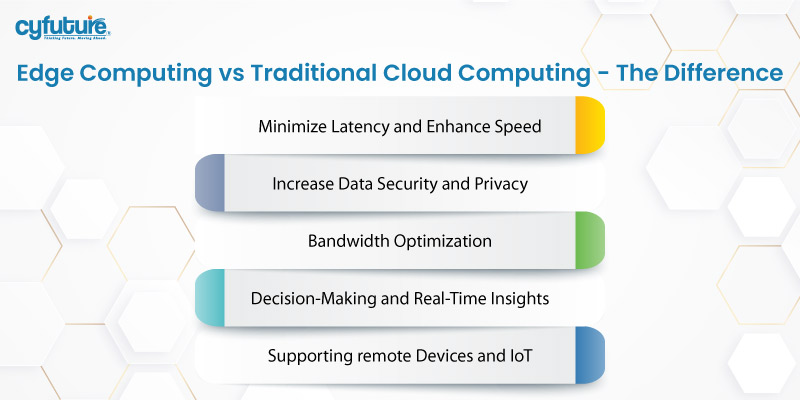

The fundamental difference between edge computing and traditional cloud computing lies in where data is processed. Traditional cloud computing sends data to centralized, often distant, data centers for processing and analysis. Edge computing processes data as close as possible to its origin, be it a sensor, device, or application.

This fundamental difference brings several critical advantages.

Edge computing significantly reduces the time data takes to travel from its source to the processing point, enabling near-instantaneous responses. This reduced latency greatly benefits applications that need real-time information, such as autonomous vehicles and virtual reality.
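To see why proximity matters, consider propagation delay alone. Light in optical fiber travels at roughly two-thirds of its vacuum speed, about 200,000 km/s. The sketch below compares the minimum round-trip time to a hypothetical cloud region 2,000 km away with a hypothetical edge node 10 km away; both distances are illustrative assumptions, and real latency adds routing, queuing, and processing time on top of this floor.

```python
FIBER_SPEED_KM_PER_S = 200_000  # approximate speed of light in optical fiber (~2/3 c)


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time from propagation delay alone, in milliseconds."""
    one_way_s = distance_km / FIBER_SPEED_KM_PER_S
    return 2 * one_way_s * 1000


# Hypothetical distances chosen for illustration only
cloud_rtt = min_round_trip_ms(2_000)  # distant cloud region
edge_rtt = min_round_trip_ms(10)      # nearby edge node

print(f"cloud: {cloud_rtt:.1f} ms, edge: {edge_rtt:.2f} ms")
```

This gives a floor of 20 ms for the cloud round trip versus 0.1 ms for the edge node, before any real-world overhead is added.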

Storing and processing data at the edge reduces the need to send sensitive information to distant servers. This architectural choice can improve data privacy and security, addressing concerns about data breaches and compliance.
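One way to apply this at the edge is data minimization: forward only the fields a central service actually needs and coarsen anything location-related. The sketch below assumes a hypothetical sensor record format and a hypothetical allow-list of field names; it illustrates the idea and is not a complete privacy or compliance solution.

```python
from typing import Any

# Hypothetical allow-list: only these fields may leave the edge device
ALLOWED_FIELDS = {"device_id", "timestamp", "temperature_c", "lat", "lon"}
LOCATION_DECIMALS = 2  # about 1 km of latitude precision


def minimize(record: dict[str, Any]) -> dict[str, Any]:
    """Drop non-allowed fields and coarsen coordinates before upload."""
    out = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    for key in ("lat", "lon"):
        if key in out:
            out[key] = round(out[key], LOCATION_DECIMALS)
    return out


raw = {
    "device_id": "cam-17",
    "timestamp": 1700000000,
    "temperature_c": 21.4,
    "lat": 52.520008,
    "lon": 13.404954,
    "operator_name": "J. Doe",       # personal data that stays on the device
    "face_embedding": [0.12, 0.98],  # biometric data that stays on the device
}
print(minimize(raw))
```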

Processing data locally at the edge reduces the amount that must be transmitted to centralized servers. This bandwidth efficiency helps alleviate network congestion and lowers data transmission costs.
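A common pattern behind this saving is local aggregation: the edge node keeps the raw readings and uploads only a compact summary per time window. The sketch below compares the JSON payload size of one minute of simulated 10 Hz sensor readings with a min/max/mean summary; the sample rate, data, and payload format are assumptions for illustration.

```python
import json
import random
import statistics

random.seed(0)        # reproducible fake data for the demo
SAMPLE_RATE_HZ = 10   # hypothetical sensor sampling rate
WINDOW_S = 60         # one upload per minute

# One minute of simulated temperature readings around 20 °C
readings = [round(random.gauss(20.0, 0.5), 3) for _ in range(SAMPLE_RATE_HZ * WINDOW_S)]


def summarize(values: list) -> dict:
    """Reduce a window of readings to a few statistics for upload."""
    return {
        "count": len(values),
        "min": min(values),
        "max": max(values),
        "mean": round(statistics.fmean(values), 3),
    }


raw_bytes = len(json.dumps(readings).encode())
summary_bytes = len(json.dumps(summarize(readings)).encode())
print(f"raw: {raw_bytes} B, summary: {summary_bytes} B, "
      f"about {raw_bytes / summary_bytes:.0f}x smaller")
```

The exact ratio depends on the data, but the summary stays roughly the same size no matter how many readings the window holds.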

Analyzing data at the edge gives organizations instant insights and accelerates decision-making. This agility is invaluable in situations where decisions must be made immediately.
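Fast local decisions often come down to simple rules evaluated on the device itself. The sketch below, assuming a hypothetical temperature feed and limit, keeps a short rolling average on the edge node and triggers an action as soon as it crosses the limit, with no round trip to the cloud.

```python
from collections import deque


class RollingThreshold:
    """Trigger when the moving average of the last `window` readings exceeds `limit`."""

    def __init__(self, window: int, limit: float) -> None:
        self.values: deque = deque(maxlen=window)
        self.limit = limit

    def update(self, value: float) -> bool:
        self.values.append(value)
        # Only decide once the window is full, to avoid reacting to a single spike
        if len(self.values) < self.values.maxlen:
            return False
        return sum(self.values) / len(self.values) > self.limit


monitor = RollingThreshold(window=3, limit=80.0)  # hypothetical limit in °C
for reading in [70, 75, 79, 85, 88]:
    if monitor.update(reading):
        print(f"reading {reading}: over limit, shutting down locally")
```

Here only the last reading triggers the action, because that is the first point where the three-reading average (84) exceeds the limit.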

IoT and remote devices that produce large amounts of data benefit from edge computing. It is ideal for remote or mobile applications because it facilitates efficient processing and analysis without relying on constant connectivity to the cloud.
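Operating without constant connectivity usually means buffering data locally and forwarding it when a link is available. Below is a minimal store-and-forward sketch; the `uplink` callable is a hypothetical placeholder for whatever transport a real deployment uses, and the bounded queue drops the oldest messages if an outage outlasts local storage.

```python
from collections import deque
from typing import Callable


class StoreAndForward:
    """Buffer messages while offline and flush them in order once online."""

    def __init__(self, uplink: Callable[[str], bool], capacity: int = 1000) -> None:
        self.uplink = uplink                         # returns True if delivered
        self.buffer: deque = deque(maxlen=capacity)  # oldest entries drop when full

    def send(self, message: str) -> None:
        self.buffer.append(message)
        self.flush()

    def flush(self) -> int:
        """Deliver buffered messages in FIFO order; stop at the first failure."""
        sent = 0
        while self.buffer:
            if not self.uplink(self.buffer[0]):
                break  # link is down; keep the message for the next attempt
            self.buffer.popleft()
            sent += 1
        return sent


# Simulated link that is offline for the first two messages
online = {"up": False}
delivered: list = []


def fake_uplink(msg: str) -> bool:
    if online["up"]:
        delivered.append(msg)
    return online["up"]


node = StoreAndForward(fake_uplink, capacity=100)
node.send("reading-1")
node.send("reading-2")
online["up"] = True
node.send("reading-3")
print(delivered)
```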

Different computing tasks call for different architectures, and an architecture that suits one type of task may not suit another. Edge computing has emerged as a viable and important architecture for distributed computing, ideally located in the same physical place as the data source. Distributed computing models are not new: remote offices, branch offices, data center colocation, and cloud computing all have long, proven track records.

However, decentralization can pose a challenge: it requires extensive monitoring and control, which is easily overlooked when moving away from a conventional centralized computing model. Edge computing has become relevant because it offers an effective solution to emerging network problems associated with moving the enormous volumes of data that today’s organizations produce and consume. It is not simply a matter of quantity; applications also increasingly depend on time-sensitive processing and responses.

Consider the emergence of autonomous vehicles. They will rely on intelligent traffic control signals, and both the vehicles and the traffic management systems will need real-time data. Multiply this requirement by the huge number of autonomous vehicles on the road, and the scope of the potential problems becomes clear: a fast, responsive network is essential. Fog and edge computing tackle three major network drawbacks: bandwidth, latency, and network inconsistency.

Bandwidth is the amount of data a network can transmit over time, usually expressed in bits per second. Wireless links are typically more constrained than wired ones, but every network has a finite limit on how much data it can carry. It’s possible to boost bandwidth to accommodate more devices and data, but doing so is costly, and higher limits do not fix the other problems.
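A back-of-the-envelope check shows how quickly device counts exhaust a link. The figures below (500 video cameras at 4 Mbit/s each sharing a 1 Gbit/s uplink, and edge filtering that forwards 2% of the raw stream) are hypothetical and chosen only to illustrate the arithmetic.

```python
def required_mbps(devices: int, per_device_mbps: float) -> float:
    """Aggregate bandwidth needed if every device streams continuously."""
    return devices * per_device_mbps


def utilization(demand_mbps: float, link_mbps: float) -> float:
    """Fraction of link capacity the demand would consume (>1.0 means oversubscribed)."""
    return demand_mbps / link_mbps


demand = required_mbps(devices=500, per_device_mbps=4.0)  # hypothetical camera fleet
link = 1_000.0                                            # hypothetical 1 Gbit/s uplink
print(f"demand {demand:.0f} Mbit/s, utilization {utilization(demand, link):.0%}")

# Assumption: edge nodes analyze video locally and forward only 2% of the raw stream
print(f"with edge filtering: {utilization(demand * 0.02, link):.0%}")
```

Streaming everything would need twice the link's capacity (200%), while forwarding only filtered events uses about 4% of it.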

Latency is the time it takes to transfer data between two points on a network. Even though signals travel at close to the speed of light, long physical distances and network congestion can slow data movement. This delays analytics and decision-making and reduces the system’s ability to respond promptly. For a self-driving vehicle, such delays could cost lives.
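The stakes become concrete when latency is converted into distance. A vehicle keeps moving while it waits for a decision, so the distance covered during a delay is simply speed multiplied by latency. The speed and latency values below are illustrative assumptions.

```python
def distance_during_delay_m(speed_kmh: float, latency_ms: float) -> float:
    """Metres travelled while waiting latency_ms at a constant speed_kmh."""
    speed_m_per_s = speed_kmh / 3.6  # km/h to m/s
    return speed_m_per_s * (latency_ms / 1000)


for latency in (10, 100, 500):  # hypothetical end-to-end latencies in ms
    d = distance_during_delay_m(speed_kmh=100, latency_ms=latency)
    print(f"{latency:>4} ms at 100 km/h -> {d:.2f} m travelled")
```

At 100 km/h, a 100 ms delay corresponds to roughly 2.8 m of travel before the vehicle can react.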

The internet is a global network of networks. It has evolved to handle general-purpose data exchange well enough for most everyday computing tasks, such as file transfers or basic streaming, but the sheer number of connected devices can overwhelm it, causing excessive traffic and time-consuming data retransmissions. During outages, congestion can worsen and communication with some users may be lost entirely, rendering the connection useless.
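Unreliable links are usually handled with retries that back off exponentially and add random jitter, so that many devices recovering at once do not all retry in lockstep. The sketch below implements the common "full jitter" variant; `send` is a hypothetical callable representing any network operation that may raise `ConnectionError`, and the delay parameters are illustrative defaults.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    send: Callable[[], T],
    max_attempts: int = 5,
    base_delay_s: float = 0.1,
    max_delay_s: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call send(), retrying on ConnectionError with capped exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return send()
        except ConnectionError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: let the caller handle the failure
            cap = min(max_delay_s, base_delay_s * 2 ** attempt)
            sleep(random.uniform(0, cap))  # full jitter: wait a random time in [0, cap]
    raise AssertionError("unreachable")


# Simulated flaky link: fails twice, then succeeds
calls = {"n": 0}


def flaky_send() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("link dropped")
    return "ack"


print(retry_with_backoff(flaky_send, sleep=lambda s: None))  # skip real waiting in the demo
```

Injecting `sleep` keeps the function testable; in production the default `time.sleep` applies.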

The advent of edge computing brings numerous advantages, but it is not without drawbacks and considerations. Keep the following critical points in mind:

The administration of a distributed edge network can be a challenging undertaking. It calls for specialized skills and knowledge in remote device administration, network setup, and security protocols. To ensure that their edge networks are effectively managed, organizations must invest in training and resources.
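Much of this administration starts with knowing which devices are alive. A minimal sketch, assuming each device periodically reports a heartbeat (a hypothetical protocol), flags any device that has been silent for longer than a timeout.

```python
import time
from typing import Optional


class HeartbeatMonitor:
    """Track the last heartbeat per device and report the ones that went silent."""

    def __init__(self, timeout_s: float) -> None:
        self.timeout_s = timeout_s
        self.last_seen: dict = {}

    def record(self, device_id: str, now: Optional[float] = None) -> None:
        """Store the time of the latest heartbeat from device_id."""
        self.last_seen[device_id] = time.monotonic() if now is None else now

    def stale(self, now: Optional[float] = None) -> list:
        """Return devices whose last heartbeat is older than the timeout, sorted by id."""
        now = time.monotonic() if now is None else now
        return sorted(d for d, t in self.last_seen.items() if now - t > self.timeout_s)


hb = HeartbeatMonitor(timeout_s=30)
hb.record("gateway-a", now=0)
hb.record("gateway-b", now=0)
hb.record("gateway-a", now=40)  # gateway-a keeps reporting
print(hb.stale(now=50))         # gateway-b has been silent for 50 s
```

`time.monotonic()` is used for real timestamps because, unlike wall-clock time, it cannot jump backwards.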

In remote or unsupervised settings, edge devices are more vulnerable to physical tampering and cyberattacks. Robust security safeguards at the edge, including security monitoring and encryption, are crucial.
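Encryption in transit is normally handled by TLS, but edge messages also benefit from integrity checks so that a tampered payload can be detected. The sketch below signs each message with HMAC-SHA256 using Python's standard `hmac` module; the shared key is a hypothetical placeholder, and in practice keys would be provisioned per device and kept in secure hardware where available.

```python
import hashlib
import hmac
import json

DEVICE_KEY = b"example-shared-secret"  # hypothetical; never hard-code real keys


def _canonical(payload: dict) -> bytes:
    """Encode the payload deterministically so sender and receiver hash identical bytes."""
    return json.dumps(payload, sort_keys=True, separators=(",", ":")).encode()


def sign(payload: dict, key: bytes = DEVICE_KEY) -> dict:
    """Attach an HMAC-SHA256 tag computed over the canonical payload."""
    tag = hmac.new(key, _canonical(payload), hashlib.sha256).hexdigest()
    return {"payload": payload, "tag": tag}


def verify(message: dict, key: bytes = DEVICE_KEY) -> bool:
    """Recompute the tag and compare in constant time."""
    expected = hmac.new(key, _canonical(message["payload"]), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, message["tag"])


msg = sign({"device_id": "valve-3", "pressure_kpa": 412})
print(verify(msg))                    # untouched message
msg["payload"]["pressure_kpa"] = 999  # simulated tampering in transit
print(verify(msg))
```

On its own this does not stop replayed messages; including a timestamp or counter inside the signed payload is a common addition.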

As data is increasingly processed and stored at the edge, organizations must establish clear data governance policies. These policies should address compliance with data regulations and standards.

Scalability becomes crucial as the number of edge devices and servers grows. Systems must be designed to handle growing volumes of data and numbers of devices, which requires effective management strategies and a robust infrastructure.
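One technique that helps with this kind of growth is consistent hashing, which assigns devices to edge servers so that adding a server moves only a fraction of the devices instead of reshuffling all of them. This is our illustrative choice of technique, not something the text prescribes; server and device names are hypothetical.

```python
import bisect
import hashlib


def _hash(key: str) -> int:
    """Stable 64-bit hash (Python's built-in hash() is randomized per process)."""
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")


class HashRing:
    """Consistent hash ring with virtual nodes for smoother load spreading."""

    def __init__(self, servers: list, vnodes: int = 100) -> None:
        self.vnodes = vnodes
        self._ring: list = []  # sorted (hash, server) pairs
        for server in servers:
            self.add(server)

    def add(self, server: str) -> None:
        for i in range(self.vnodes):
            bisect.insort(self._ring, (_hash(f"{server}#{i}"), server))

    def lookup(self, device_id: str) -> str:
        """Return the first server clockwise from the device's position on the ring."""
        idx = bisect.bisect(self._ring, (_hash(device_id), ""))
        return self._ring[idx % len(self._ring)][1]


devices = [f"sensor-{n}" for n in range(1000)]
ring = HashRing(["edge-1", "edge-2", "edge-3"])
before = {d: ring.lookup(d) for d in devices}
ring.add("edge-4")
after = {d: ring.lookup(d) for d in devices}
moved = sum(before[d] != after[d] for d in devices)
print(f"{moved} of {len(devices)} devices moved after adding edge-4")
```

With four servers, roughly a quarter of the devices should move, and every one of them moves to the new server.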

Getting edge devices from different manufacturers to work together is a challenge. Standardization efforts are addressing this issue, but organizations must still be careful to select devices and platforms that support interoperability.
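Until standards converge, a common workaround is a thin adapter layer that translates each vendor's payload into one internal schema. The two vendor formats below are invented for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Reading:
    """Common internal schema used by all downstream edge services."""
    device_id: str
    temperature_c: float


def from_vendor_a(msg: dict) -> Reading:
    # Hypothetical vendor A format: {"id": ..., "temp": <Celsius>}
    return Reading(device_id=msg["id"], temperature_c=float(msg["temp"]))


def from_vendor_b(msg: dict) -> Reading:
    # Hypothetical vendor B format: {"serial": ..., "tempF": <Fahrenheit>}
    celsius = (float(msg["tempF"]) - 32) * 5 / 9
    return Reading(device_id=msg["serial"], temperature_c=round(celsius, 2))


ADAPTERS: dict = {
    "vendor-a": from_vendor_a,
    "vendor-b": from_vendor_b,
}


def normalize(vendor: str, msg: dict) -> Reading:
    """Route a raw message to its vendor adapter."""
    adapter: Callable = ADAPTERS.get(vendor)
    if adapter is None:
        raise ValueError(f"unsupported vendor: {vendor}")
    return adapter(msg)


print(normalize("vendor-a", {"id": "a-1", "temp": 20.0}))
print(normalize("vendor-b", {"serial": "b-9", "tempF": 68.0}))
```

Both messages describe the same 20 °C reading, and downstream code only ever sees `Reading` objects.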

Despite its potential savings in bandwidth, organizations must take into account the initial set-up costs, ongoing maintenance, and the total cost of ownership. Budgeting should encompass both short- and long-term expenditures.
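A simple total-cost-of-ownership comparison makes the trade-off explicit: upfront setup plus yearly maintenance, weighed against yearly bandwidth savings. Every figure below is a hypothetical placeholder for an organization's own estimates, and the model ignores discounting and hardware refresh cycles.

```python
from typing import Optional


def net_cost(upfront: float, annual_maintenance: float,
             annual_bandwidth_savings: float, years: int) -> float:
    """Cumulative net cost after `years` (negative means the deployment has paid off)."""
    return upfront + years * (annual_maintenance - annual_bandwidth_savings)


def payback_years(upfront: float, annual_maintenance: float,
                  annual_bandwidth_savings: float) -> Optional[float]:
    """Years until net savings cover the upfront cost, or None if they never do."""
    net_annual_saving = annual_bandwidth_savings - annual_maintenance
    if net_annual_saving <= 0:
        return None
    return upfront / net_annual_saving


# Hypothetical figures in USD
UPFRONT = 120_000
MAINTENANCE = 15_000
SAVINGS = 55_000

for year in (1, 3, 5):
    print(f"year {year}: net cost {net_cost(UPFRONT, MAINTENANCE, SAVINGS, year):,.0f}")
print(f"payback after {payback_years(UPFRONT, MAINTENANCE, SAVINGS):.1f} years")
```

With these placeholder numbers the deployment breaks even after three years; if maintenance exceeds savings, `payback_years` returns `None`.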

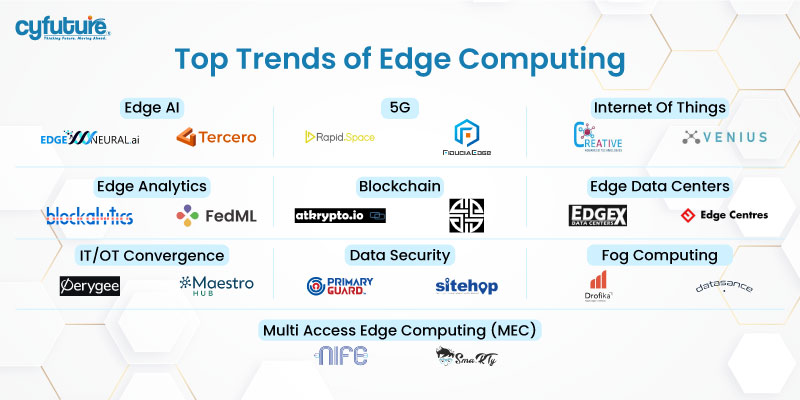

Here are several exciting developments and trends shaping the future of edge computing. Let’s examine each of them!

The introduction of 5G networks has profound implications for edge computing. Its high-speed, low-latency connectivity will enable more real-time applications and services at the edge.

Artificial intelligence and machine learning algorithms can run locally on edge devices. This trend will lead to intelligent edge applications and enhanced decision-making capabilities.
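At its simplest, running a model at the edge means shipping trained parameters to the device and evaluating them locally. The sketch below scores a logistic-regression model with hypothetical, hand-picked weights for a machine-failure warning; a real deployment would export weights from a training pipeline and often use an optimized inference runtime, which we do not assume here.

```python
import math

# Hypothetical parameters, e.g. exported from a model trained in the cloud
WEIGHTS = {"vibration_mm_s": 0.8, "temperature_c": 0.05}
BIAS = -6.0


def failure_probability(features: dict) -> float:
    """Logistic regression: sigmoid(bias + sum(weight * feature))."""
    z = BIAS + sum(WEIGHTS[name] * features[name] for name in WEIGHTS)
    return 1 / (1 + math.exp(-z))


def should_alert(features: dict, threshold: float = 0.5) -> bool:
    """Decide on the device itself, without contacting the cloud."""
    return failure_probability(features) >= threshold


print(should_alert({"vibration_mm_s": 2.0, "temperature_c": 40.0}))  # z = -2.4
print(should_alert({"vibration_mm_s": 7.0, "temperature_c": 60.0}))  # z = 2.6
```

The first reading stays below the threshold (about 8% estimated risk) and the second triggers an alert (about 93%).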

Edge AI chips are being developed to accelerate AI workloads on edge devices, with the goal of delivering high performance and efficiency.

Edge cloud platforms provide a standard environment for deploying and managing edge applications, helping organizations speed up edge computing deployments.

Many organizations are adopting hybrid architectures that combine centralized cloud resources with edge computing, allowing them to benefit from both approaches.
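In a hybrid architecture, each workload has to be placed somewhere. A minimal placement policy, using hypothetical round-trip latencies, runs a task at the edge when its raw data must stay local or when the cloud cannot meet its latency budget, and otherwise sends it to the cloud.

```python
from dataclasses import dataclass

# Hypothetical round-trip latencies in milliseconds
EDGE_RTT_MS = 5
CLOUD_RTT_MS = 60


@dataclass
class Task:
    name: str
    latency_budget_ms: float
    data_must_stay_local: bool = False


def place(task: Task) -> str:
    """Return 'edge', 'cloud', or 'unschedulable' under this simple policy."""
    if task.data_must_stay_local:
        return "edge"
    if CLOUD_RTT_MS > task.latency_budget_ms:
        return "edge" if EDGE_RTT_MS <= task.latency_budget_ms else "unschedulable"
    return "cloud"


tasks = [
    Task("collision-avoidance", latency_budget_ms=20),
    Task("nightly-model-training", latency_budget_ms=3_600_000),
    Task("patient-video-analysis", latency_budget_ms=500, data_must_stay_local=True),
]
for t in tasks:
    print(f"{t.name}: {place(t)}")
```

Real schedulers also weigh edge capacity and cost, which this sketch leaves out.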

With edge-to-edge communication, edge devices exchange data directly with one another, enabling collaboration and shared decision-making. This could result in quicker response times and more effective data handling.
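Direct device-to-device exchange can be as simple as flooding: each node relays an alert to its neighbors once. The sketch below floods an alert through a hypothetical neighbor graph of roadside units, with a hop limit, and records how many hops it took to reach each unit without any central server; real systems also need message IDs to deduplicate separate alerts, plus authentication.

```python
from collections import deque

# Hypothetical topology: which edge nodes can talk to each other directly
NEIGHBORS: dict = {
    "rsu-1": ["rsu-2", "rsu-3"],
    "rsu-2": ["rsu-1", "rsu-4"],
    "rsu-3": ["rsu-1", "rsu-4"],
    "rsu-4": ["rsu-2", "rsu-3", "rsu-5"],
    "rsu-5": ["rsu-4"],
}


def flood(origin: str, max_hops: int = 10) -> dict:
    """Relay an alert from origin; each node forwards it once. Returns hop counts."""
    hops = {origin: 0}
    queue = deque([origin])
    while queue:
        node = queue.popleft()
        if hops[node] >= max_hops:
            continue  # hop limit reached; do not forward further
        for peer in NEIGHBORS.get(node, []):
            if peer not in hops:  # peer already has the alert: ignore the duplicate
                hops[peer] = hops[node] + 1
                queue.append(peer)
    return hops


print(flood("rsu-1"))
```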

In conclusion, edge computing marks a pivotal moment in the constantly evolving realm of digital technology, one where the cloud merges with the real world. As demand for faster processing and real-time analysis continues to grow, traditional cloud architectures struggle to keep up. Edge computing offers a compelling solution that brings computational resources closer to data sources, reducing latency, enhancing security, and optimizing bandwidth.

Understanding edge computing starts with its basic principles and how it differs from the conventional cloud. It is not a replacement but an enhancement, leveraging technologies such as 5G networks, high-performance computing, artificial intelligence and machine learning, IoT devices, and enhanced edge security to transform data handling and analysis.

Market projections for edge computing anticipate substantial growth in segments such as mobile edge computing and edge AI. At the same time, adopting edge computing brings challenges, including data governance, scalability, interoperability, and cost management.

The outlook for edge computing is promising, with advancements in 5G integration, edge AI chips, edge cloud platforms, hybrid architectures, and edge-to-edge communication on the horizon. To unlock its full potential, organizations must navigate these challenges and seize the opportunities it presents.

Are you ready for the transformative power of edge computing? As we navigate the path toward a more connected and efficient digital future, we invite you to explore its intricacies, benefits, and obstacles with us. Join the cutting-edge computing revolution today.

Edge computing has transformative implications for various industries and applications. It enables industries like manufacturing, healthcare, transportation, and retail to leverage real-time data processing for enhanced operational efficiency, predictive maintenance, autonomous vehicles, augmented reality, and more immersive customer experiences.

The introduction of 5G networks significantly enhances the capabilities of edge computing by providing high-speed, low-latency connectivity. This enables a wide range of real-time applications and services at the edge, including autonomous vehicles, remote healthcare monitoring, smart cities, and immersive gaming experiences.

Edge computing is not a replacement for cloud computing but a complementary technology. While cloud computing remains essential for storing and processing large volumes of data, edge computing extends the cloud’s capabilities by enabling real-time processing and analysis closer to where data is generated, improving performance and efficiency.

While edge computing offers significant advantages, it also presents challenges such as managing distributed infrastructure, ensuring security across edge devices, addressing data governance issues, achieving interoperability among different devices and platforms, and effectively managing costs associated with deployment and maintenance.

The prospects for edge computing appear promising, as edge AI chips, hybrid architectures, and edge-to-edge communication are expected to drive further innovation. As organizations continue to embrace digital transformation, edge computing will shape the future of computing infrastructure.