The field of artificial intelligence (AI) and machine learning (ML) has been growing rapidly, driven by advances in computational capabilities and software frameworks. NVIDIA is at the forefront of this change with its GPU Cloud (NGC) platform, which has helped make AI capabilities more widely available.

In this blog post, we discuss NVIDIA’s GPU Cloud technology and its impact on AI and ML development.

Introduction to NVIDIA GPU Cloud

NVIDIA GPU Cloud (NGC) is a cloud-based platform built for AI developers. It gives them access to the right tools and to GPU computing resources. The platform was made public to meet the needs of developers who would otherwise have to integrate complex software stacks themselves and find access to supercomputing-class hardware.

NGC is a repository of containerized software: each container packages a complete stack of the necessary software, including deep learning frameworks. Frameworks such as TensorFlow, PyTorch, and Caffe, together with NVIDIA’s libraries (CUDA and cuDNN), are available as containers in the NGC catalog.
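To make the container idea concrete, here is a minimal sketch of how a launch command for a framework container might be assembled. It assumes Docker with the NVIDIA Container Toolkit is installed and that images follow the `nvcr.io/nvidia/<framework>:<tag>` naming used by NVIDIA's registry. The helper name `ngc_docker_command` is hypothetical, and the tag is deliberately left as a placeholder because valid tags change with each release.

```python
import shlex
from typing import List


def ngc_docker_command(framework: str, tag: str, gpus: str = "all") -> List[str]:
    """Build a `docker run` argument list for a framework container on nvcr.io.

    Hypothetical helper for illustration; it only builds the command and
    does not run it or check that the image exists.
    """
    if not framework or not tag:
        raise ValueError("framework and tag must be non-empty")
    image = f"nvcr.io/nvidia/{framework}:{tag}"
    # --gpus exposes host GPUs to the container (needs the NVIDIA Container Toolkit).
    # --rm removes the container on exit; -it attaches an interactive terminal.
    return ["docker", "run", "--gpus", gpus, "--rm", "-it", image]


if __name__ == "__main__":
    # "<tag>" is a placeholder: look up a current tag in the NGC catalog.
    cmd = ngc_docker_command("pytorch", "<tag>")
    print(shlex.join(cmd))
```

Building the command as a list rather than a single string keeps each argument separate, which is the form `subprocess.run` expects if you later decide to execute it.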

Key Benefits of NVIDIA GPU Cloud

- Optimized Performance: NGC is designed to run on NVIDIA GPUs, which helps deep learning workloads achieve high performance. The containerized software stack is certified and regularly updated by NVIDIA to run smoothly on NVIDIA GPUs both on-premises and in the cloud.

- Simplified Integration: With NGC, developers avoid the complexity of combining different software stacks by hand. The pre-integrated containers let you start deep learning projects right away, saving both time and effort.

- Flexibility and Scalability: NGC offers flexible deployment options. Developers can run an application on a single GPU, scale up with NVIDIA DGX systems, or use cloud services such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP).

- Cost Efficiency: Users pay only for the computing resources they actually use, which makes NGC cost-effective for projects whose processing needs vary.
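The pay-for-what-you-use point can be illustrated with some simple arithmetic. The sketch below is only an illustration: the hourly rate, the project phases, and the function names are all hypothetical and do not reflect any provider's actual pricing.

```python
from typing import Iterable, Tuple


def on_demand_cost(gpu_count: int, hours: float, rate_per_gpu_hour: float) -> float:
    """Cost of renting `gpu_count` GPUs for `hours` at a per-GPU hourly rate."""
    if gpu_count < 0 or hours < 0 or rate_per_gpu_hour < 0:
        raise ValueError("inputs must be non-negative")
    return gpu_count * hours * rate_per_gpu_hour


def total_cost(phases: Iterable[Tuple[int, float]], rate_per_gpu_hour: float) -> float:
    """Sum the cost of several (gpu_count, hours) phases billed at the same rate."""
    return sum(on_demand_cost(g, h, rate_per_gpu_hour) for g, h in phases)


if __name__ == "__main__":
    RATE = 2.5  # hypothetical price per GPU-hour, not a real quote
    # Hypothetical project: prototyping on 1 GPU, training on 8, evaluation on 2.
    phases = [(1, 40.0), (8, 10.0), (2, 20.0)]
    pay_per_use = total_cost(phases, RATE)
    # Alternative: keep all 8 GPUs reserved for the whole 70 hours.
    always_on = on_demand_cost(8, 70.0, RATE)
    print(f"pay per use: {pay_per_use}, always on: {always_on}")
```

Under these made-up numbers, paying per phase costs well under a third of keeping the peak configuration running for the whole project, which is the kind of gap that matters when a project's GPU needs vary.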

Impact on AI and Machine Learning Development

- Accelerated Development Cycle: By giving immediate access to the right frameworks and computing resources, NGC speeds up the development cycle for AI and ML projects.

- Enhanced Collaboration: Because NGC is cloud-based, multiple teams can work on the same project simultaneously, wherever the developers are located. This is especially useful for large-scale AI projects that need developers in different locations to work in parallel.

- Democratization of AI: NGC makes AI development tools easier to access, so more people and organizations can work on AI and ML projects. As a result, a wide variety of AI applications have emerged across business fields, including healthcare and finance.

Future Developments and Partnerships

NVIDIA’s partnerships with cloud providers keep expanding. A recent example is the Blackwell GPU platform, which is set to become available on Amazon’s AWS, Microsoft’s Azure, and Google’s GCP. In addition, Google’s Vertex AI and JAX framework are expected to be better supported on NVIDIA GPUs. These collaborations signal NVIDIA’s continued commitment to machine learning in both hardware and software.

Here’s a breakdown of the key developments and partnerships:

Integration of NVIDIA’s Blackwell GPU Platform

NVIDIA has announced that the Blackwell GPU platform will be available on Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). This matters because the Blackwell platform, powered by the GB200 Grace Blackwell Superchip, is a major step forward in GPU computing, especially for generative AI training and large language models (LLMs).

- AWS: The Blackwell platform will be offered on AWS through new EC2 instances and DGX Cloud, improving performance for multi-trillion-parameter LLMs. The AWS Nitro System and Elastic Fabric Adapter will also be integrated with Blackwell encryption to provide strong security for AI applications.

- Azure: Microsoft plans to launch the Blackwell GPU platform on Azure and integrate it with Microsoft Fabric to improve AI performance.

- GCP: Google Cloud is also set to adopt the Grace Blackwell AI computing platform and the NVIDIA DGX Cloud service to strengthen its AI infrastructure.

Enhanced Support for Google’s Vertex AI and JAX Framework

Publicly available details do not explicitly describe performance gains for Google’s Vertex AI and JAX framework on NVIDIA GPUs. NVIDIA does, however, routinely work to make its GPUs run a range of AI frameworks efficiently, and cloud providers are likely to design their infrastructure for GPU-optimized AI work.

The intended audience is AI developers who want to run AI workloads on NVIDIA GPUs more effectively across different platforms, and NVIDIA’s cloud offerings are aimed at that goal.

Commitment to Advancing AI Computing

These collaborations demonstrate NVIDIA’s commitment to improving AI computing infrastructure and services. By combining its GPU technology with major cloud services, the company makes it easier and safer for developers and organizations to build and deploy AI applications. This strategy gives NVIDIA a leading position in the AI infrastructure sector and supports innovation and the spread of AI across industries.

How does NVIDIA’s partnership with major cloud providers enhance AI computing infrastructure?

NVIDIA’s partnerships with major cloud providers significantly enhance AI computing infrastructure in several ways:

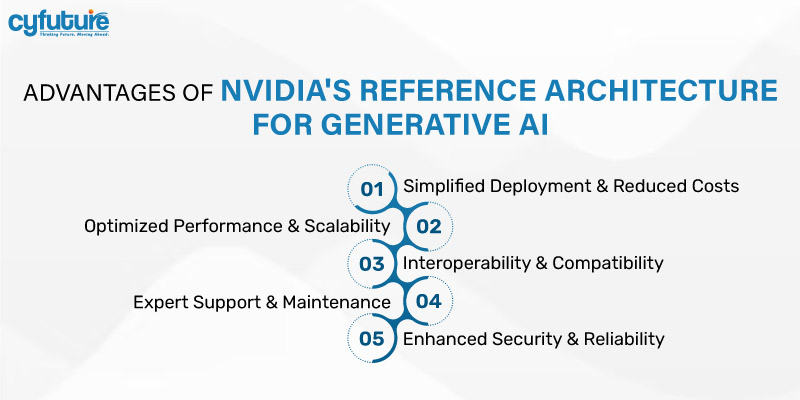

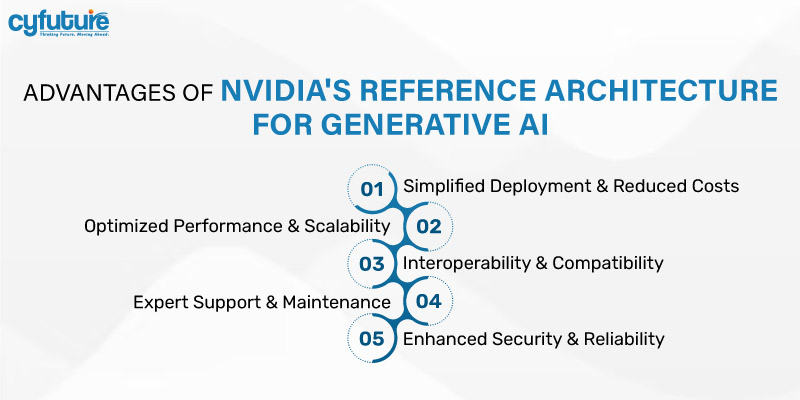

Optimized Performance and Scalability

- Using NVIDIA’s GPU Technology: By using NVIDIA’s high-performance GPUs, such as the H100 and the Grace Blackwell platform, cloud providers can offer optimized performance for AI workloads. Put simply, this lets developers take on more demanding tasks and train and deploy much larger language models and generative AI applications more effectively within the same budget and time frame.

- Scalability: These partnerships make AI infrastructure scalable enough to keep up with growing needs and challenges. An adaptive infrastructure is important for launching new AI products and solutions and for planning large AI initiatives safely.

Simplified Deployment and Management

- Reference Architectures: NVIDIA shares reference architectures, including the NVIDIA Cloud Partner (NCP) architecture, that help cloud providers build secure, high-performance data centres for AI services. This simplifies deployment and reduces the time and cost of setting up AI infrastructure.

- DGX Cloud Service: With NVIDIA’s DGX Cloud service available on Google Cloud and similar platforms, developers can quickly access pre-configured AI environments without setting up their own, which shortens the path of an AI application from idea to profitable product.

Enhanced Collaboration and Accessibility

- Collaborative Ecosystem: The partnership between NVIDIA and cloud providers creates a collaborative ecosystem that gives developers access to a wide range of AI tools and frameworks, including support for JAX and Vertex AI. As a result, AI development is no longer the exclusive domain of those who can afford specialized technical talent; a much broader group of people can gain access to these tools.

- Cost Efficiency: Cloud-based infrastructure lets companies use AI without large upfront investments in AI infrastructure, which makes AI accessible to businesses of any size.

Advanced AI Capabilities

- Generative AI Support: NVIDIA and cloud providers such as Google are working together to support generative AI and large language models, so that developers can create and deploy more sophisticated AI applications more easily. For instance, NVIDIA’s Blackwell platform is intended for real-time inference on trillion-parameter models.

- Innovation and Research: By working together, NVIDIA and cloud providers such as Google are pushing AI innovation forward. Giving researchers and developers access to the latest tools and infrastructure should lead to advances in AI research and applications.

Conclusion

NVIDIA GPU Cloud has significantly influenced the AI industry by giving developers an effective, customizable, and easy-to-use platform for building and deploying AI applications. As AI reshapes business sectors across the global economy, NGC continues to play an important role by putting AI within reach of more companies.

To summarize, AI is driving change across many industries, and NGC will remain a relevant factor in its adoption.
